Earlier this week, I found myself on a short plane ride without wifi— surely one of the last bastions where a person has to content themself without new information for a couple of hours. And so I uncharacteristically had the opportunity to read an entire magazine issue from cover to cover. I picked a good one.

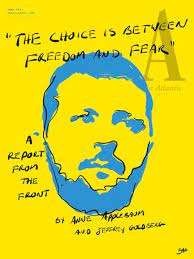

The Atlantic June issue has a portrait of Volodymyr Zelensky in blue and yellow (rendered by Bono, yes, that Bono), and a quotation saying, “The choice is between freedom and fear.” While Jeffrey Goldberg, the editor of the magazine, writes in his note about the outstanding reporting in the magazine (and he himself reported on the Ukraine story that anchors the magazine this month), he doesn’t really reflect on the issue of this particular choice, but I saw it front-and-center throughout the stories. The quotation itself is sort of weirdly positioned (p. 23), and perhaps is meant to comment on the idea that Ukraine represents freedom as an “open, networked, flexible society” whereas Russia represents the past, where “commanders send waves of poorly armed conscripts to be slaughtered.” I might be misreading that though, because the quotation sounds like it wants to be about two choices for Ukraine to be making, whereas the article makes it seem as though freedom and fear are pitted against each other like the two warring nations.

In any event, I suppose that these polarities of fear and freedom could anchor a conversation about nearly any contemporary issue, but nonetheless, in this case, I want to highlight something I noticed about the first and last articles in the issue. They are both warnings of a certain sort, and I think they make an interesting pair on exactly this tension.

The first article, the “Opening Argument,” is written by Ross Andersen. It is a conversation about Artificial Intelligence, and how and why we humans are tempted to give over some complicated decision making to artificial intelligence. It makes me think of how, in conversations and interviews about self-driving cars, human drivers tend to trust artificial intelligence more than is warranted by current technology. The explanation that I have always favored about this is that human beings know, from experience, just how fallible we are as drivers. We get tired, or angry; we squint on winter afternoons to see our way through intersections. We get text messages or have to reach around to hand a juice box to a child. We reason that a machine that wasn’t subjected to these distractions of emotions or logistics would do better than we would.

In other words, our human experiences lead us to believe that machines are more perfect, less susceptible to cloudy decision making.

Andersen points out that this is a mistake, because AI is really susceptible to other kinds of errors that humans don’t typically make. He writes, “In 2018, AI researchers demonstrated that tiny perturbations in images of animals could fool neural networks into misclassifying a panda as a gibbon.” We’re shocked that what we find so obviously simple is the very thing that trips up a computer, but this is exactly Andersen’s point. He writes, “If AIs encounter novel atmospheric phenomena that weren’t included in their training data, they may hallucinate incoming attacks.” Lest one think that four years is enough time to straighten that out and account for any atmospheric data still missing, he points out that the slowness of human decision making is perhaps its greatest asset.

AI is phenomenal at extrapolating from past examples. But by the time we get to the point of launching a nuclear missile, it will by very definition be a novel occurrence. There aren’t hundreds of nuclear wars we can train our AI models on. It will take human bravery and curiosity and hopefully diplomacy to pull us back from the brink and avert the disaster that will destroy humanity and leave the earth to the insects. AI is fallible; it can reason badly. In a novel situation, it almost always will, in ways that are surprising to humans.

So it was really a funny coincidence that the last article in the magazine, written by David Brooks, so brilliantly echoed this worry from the opposite angle.

In “The Canadian Way of Death” by David Brooks (yes, that David Brooks), Brooks writes about how liberal values of autonomy led Canada to allow medically aided suicides called MAID, or medical assistance in dying. Anticipating the ways that this might be abused, regulations restricted this to people who, Brooks writes:

“had a serious illness or disability; the patient was in an ‘advanced state’ of decline that could not be reversed; the patient was experiencing unbearable physical or mental suffering; the patient was at the point where natural death had become ‘reasonably foreseeable’” (86).

According to Brooks’ accounting, in just a few short years, many of these restrictions have been loosened, and several high profile cases have demonstrated that people have been approved through this system even though they were lonely or sad and their suffering was primarily mental. He shows the logical end of autonomy by telling the case of a German man who murdered and ate his consenting victim, and asks what the value of that consent is. Can a single individual really decide to end their life by being eaten, or do we reject that as a society?

While Brooks lays out his plan for adjusting our rights-based liberalism to a gifts-based liberalism, his plea betrays a different suspicion about human decision-making. If he is to be believed, then what he is really arguing is that humans, in all of their complexity, sometimes take in novel information and spit out the wrong answer. He cites the case of his friend, Washington Post columnist Michael Gerson, explaining that “when he was depressed, lying voices took up residence there, spewing out falsehoods he could scarcely see around.” Of a mind confronted with a new situation, but pattern matching to everything that happened before, Brooks concludes, “This is not an autonomous, rational mind.”

And in this worry, it is as though Andersen were worrying out loud about a depressed mind mistaking a panda for a gibbon. Brooks’ depressed person is running broken software and jumping to conclusions, in much the same way that Andersen’s AI has interpreted data and jumped into action without due deliberation. It is of great interest to me that the beginning and ending of the magazine focus on the idea of right information rendered wrong by either brains or computers.

The symmetry between these cases (just to spell it out further) is that both authors are worried about getting the bugs out of the system, about making sure that mistakes aren’t made with bad reasoning. At least Andersen seems to say clearly: although we want to tweak, improve, debug, it may not be as possible as we would believe. And Brooks’ solution is certainly to treat the depression, but also in some way to suspend a person’s full autonomy while we fix the brain that is reasoning incorrectly. For Brooks, this dilemma of a poorly-reasoning brain is enough to take down the whole system: depression and its long tail of consequences are enough to turn over the entire philosophy of Mill’s liberalism. According to Brooks, we ought not let broken systems (even rational human actors under the influence of depression) make permanent conclusions with big consequences.

But as I read these two arguments together, I’m wondering if this is really the right framing after all. We are taking mistake-making to be a feature of the decision maker, and spending time thinking through which person or machine is best suited to approach a problem and solve it.

But perhaps mistake-making is not a feature of the decision-maker, but a baked-in part of a novel situation. Perhaps a situation that has not arisen before is, by its very nature, susceptible to incorrect conclusions, and destined to summon mistakes. Perfection is for the SATs only. The human experience may well be to bumble through life as new situations arise. If AI gets it wrong as much as we do, maybe it’s not that humans err, so much as erring is part of the way of the entire world. Mistakes are part of the architecture.

And with that, maybe, indeed, it all comes back to the quotation on the cover and the choice between freedom and fear. Fear would lead us to treat every decision as a possible bug, and to insist that there is a correct answer that the right system or decider could lead us to. And maybe preservation of human life is always the right answer. On the other hand, freedom could accept the possibility of wrongness. The consequences of that may indeed be too high (get it wrong with nuclear war? Are you kidding!) so perhaps that isn’t a tolerable goal, but if we don’t accept that, we will be forced to confront the reality that we have, after all, chosen to live with fear.

Ariella Radwin is a writer and cultural commentator who lives in Palo Alto, California. She reads The Atlantic with great aplomb, although rarely in full.